Back from our two-week winter break. Feels good to start writing again. Quick recap: we have dedicated Q1 of this year (2023) to the different components of a data strategy (try searching the internet: nobody has really attempted to develop a gold standard for one). In this post, we are tackling a very important component: the data-reintegration challenge. Why do I think this is important?

In short: it has to do with a trend that will only pick up speed as we go. Most domain experts know it as “data proliferation,” or the increase in the Velocity, Variety, Volume and Value of data. These are four of the five “Vs” of big data; the fifth is Veracity, or the “truth value” of data, which is an important part of handling data yet slightly out of scope for the point we’re making.

Why are we seeing such a massive increase in data? A number of societal trends are pushing this:

- Increasing adoption of cloud technology (with vendors priding themselves on using “triple mirroring” when storing data, so that it is always available from any location)

- Increasing number of digitization projects being initiated

- Rise in customer willingness to use online methods

Data proliferation is amplified further when companies are active in different geographical regions, run multiple business-critical systems (ERP, CRM, …) and have a hard time navigating vendor lock-in. The threats are substantial:

- Increasing costs

- Increased pressure on the network

- Security issues (we also tackled this when we mentioned the responsibility matrix)

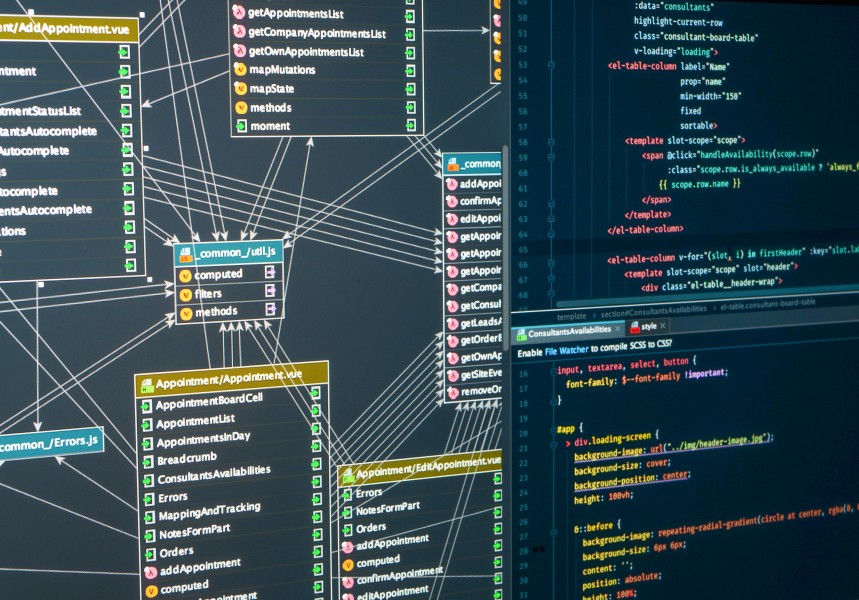

- Loss of info (when old datasets are being overwritten for example)

For the past decades, data reintegration has taken place in data warehouses (the “go-to” for managing structured data) and data lakes (less structured, but cheaper and more flexible). However, new technologies and concepts are attracting increasing interest, and two of them deserve our attention. The first is “data virtualization,” a technical concept that refers to the ability to retrieve and manipulate data at the source without needing to know the technical details of how that source stores it. The second is the “socio-technical” concept of “data mesh.” It goes beyond the technical and relates to the “duality of technology” (cf. Orlikowski), the idea that technical practices are embedded in broader social structures. Think about our responsibility matrix, itself a social construct, which would enable the formation of a data mesh by allowing teams to pick up those data pockets that have value for them without the central data team becoming a bottleneck.
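To make the data virtualization idea a bit more tangible, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular virtualization product works: the class names (`SqliteSource`, `CsvSource`, `VirtualView`), the `orders` table and the CSV column names are all hypothetical. The point is only that the consumer asks for “customer” and “amount” and never sees how each source stores them.

```python
import csv
import io
import sqlite3
from typing import Dict, Iterator, List, Optional


class SqliteSource:
    """Hypothetical adapter: exposes a SQL table as uniform row dicts."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def rows(self) -> Iterator[Dict[str, object]]:
        # The table and column names are assumptions for this sketch.
        for customer, amount in self.conn.execute(
            "SELECT customer, amount FROM orders"
        ):
            yield {"customer": customer, "amount": float(amount)}


class CsvSource:
    """Hypothetical adapter: exposes CSV text with different column names."""

    def __init__(self, text: str) -> None:
        self.text = text

    def rows(self) -> Iterator[Dict[str, object]]:
        for rec in csv.DictReader(io.StringIO(self.text)):
            # Map source-specific names onto the shared vocabulary.
            yield {"customer": rec["client_name"], "amount": float(rec["total"])}


class VirtualView:
    """Reads from every source on demand; nothing is copied into a warehouse."""

    def __init__(self, sources: List[object]) -> None:
        self.sources = sources

    def query(self, customer: Optional[str] = None) -> Iterator[Dict[str, object]]:
        for source in self.sources:
            for row in source.rows():
                if customer is None or row["customer"] == customer:
                    yield row


def build_demo_view() -> VirtualView:
    """Builds two toy sources (in-memory SQLite and a CSV string)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("Acme", 120.0), ("Globex", 80.0)],
    )
    csv_text = "client_name,total\nAcme,30.5\nInitech,10\n"
    return VirtualView([SqliteSource(conn), CsvSource(csv_text)])


if __name__ == "__main__":
    view = build_demo_view()
    for row in view.query(customer="Acme"):
        print(row)
```

The consumer only calls `view.query(...)`. Whether a row came from a database or a flat file is hidden behind the adapters, which is the separation the concept is about; a real setup would add things this sketch leaves out, such as pushing filters down to the source, caching and access control.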

Data reintegration is hard work and needs to be embedded in an architecture (and a social structure) that allows for it. You can start small-scale (we do this every day for sales organizations that want to see their important sales data in one place) or develop a broader vision (up to the level where a data mesh can be defined for your organization).

If you’d like to know how we can help with data reintegration, give us a call.