Last week, we wrote about the use of a data strategy. This week, we want to introduce our business audience to the types of data you can encounter in a business context. Data classification is a necessary part of any data activation plan. If you google it, you’ll most likely drown in the many subfields that each classify data in their own way, the most prevalent being statistics and software development. In this blog post, we want to highlight the classes and categories that will most likely impact your data approach.

At a high level, the most rudimentary distinction is that between “quantitative” and “qualitative” data. While entire manuals exist on each of these (and their uses), one can say, in very general terms, that the former uses “numbers” to make sense of events and situations whereas the latter uses “words” to describe them. While there is still considerable debate about which class serves which purpose best, most would probably agree that qualitative data (like interview transcripts, voice recordings, focus group reports, etc.) is useful for theory building whereas quantitative data helps with theory testing.

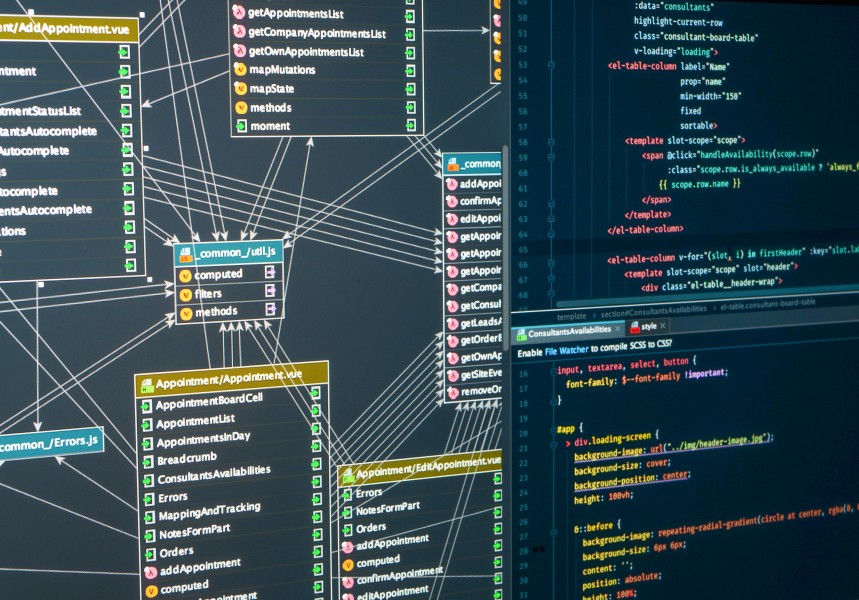

A second useful classification is that between structured, semi-structured and unstructured data. Most databases active in organizations are repositories for structured data. The best example is the standard relational database (typically queried with SQL) that uses tables (rows and columns, aka records and fields) to organize data. A typical example of a field might be “age”, which can hold a value between 0 and, say, 120 (some people do get older than 100). Semi-structured data (such as JSON or XML documents) carries some organizing labels but no fixed schema, while unstructured data (such as free text, images or audio) has no predefined data model at all. Interesting detail: current estimates of the total data volume suggest that less than 20% of all recorded data is of the structured kind. With the explosion of the internet (and the sharing of images, sounds, etc.), the volume of semi-structured and unstructured data has been steadily on the rise. Storing that information requires different types of data solutions, such as NoSQL databases, data lakes or data lakehouses.
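For readers who like to see the difference rather than read about it, here is a minimal sketch in Python. It is an illustration under our own assumptions, not a recommended design: the `customers` table, the `try_insert` helper and the sample records are all hypothetical, and we use SQLite only because it ships with Python.

```python
import json
import sqlite3

# Structured: a fixed schema, with a rule on what "age" may contain.
# (Table name, columns and the 0-120 range are illustrative assumptions.)
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        age  INTEGER CHECK (age BETWEEN 0 AND 120)
    )
    """
)


def try_insert(name, age):
    """Return True if the row fits the schema, False if the database rejects it."""
    try:
        conn.execute("INSERT INTO customers (name, age) VALUES (?, ?)", (name, age))
        return True
    except sqlite3.IntegrityError:
        # Raised when the CHECK constraint on "age" is violated.
        return False


# Semi-structured: labeled fields, but no fixed schema. Records may nest
# and may differ from one another.
semi_structured = json.loads(
    '{"name": "Ann", "age": 42, "tags": ["vip"], "notes": {"last_call": "asked about pricing"}}'
)

# Unstructured: free text with no predefined fields at all.
unstructured = "Ann called on Tuesday and asked about pricing for next year."
```

Calling `try_insert("Bob", 30)` succeeds, whereas `try_insert("Carl", 300)` is rejected by the database itself. Neither the JSON record nor the free text comes with a built-in guarantee of that kind, which is part of why they call for different storage and processing solutions.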

A third useful distinction is that between machine-generated and human-generated data. We are all “produsers” of (digital) content. Every time we tweet, post on Instagram or upload a movie to YouTube, we “create” content. We sometimes even do it without being conscious of it (think about our “clicking behavior”). That being said, our own content is dwarfed by the tsunami of machine-generated data (the volume of which is expected to rise exponentially with the increased use of “smart” devices). A Tesla (or any other “smart car”) generates between 2 and 5 terabytes of data every week. Humans will simply not be able to keep up with that. In modern factories, PLCs (programmable logic controllers) generate data in much the same way.

“The growth of memory demands will outstrip silicon supply presenting opportunities for radically new memory and storage solutions.”

As the velocity, volume and variety (the three “Vs”) of data generation rise, so does our need for modern data storage and data handling capabilities. We could also say that the complexity of data increases, as we are dealing with such things as high-dimensional data (used in face recognition technologies) and real-time data (some industries need to know as precisely as possible what the value of a given parameter is at a given moment). One of the promising new technologies that might prove important to cope with “big data” (a term used to designate volumes of data that are difficult to handle with traditional relational database models) is “knowledge graphs.” If you are struggling with performance issues, or would like to know more about the potential of this technology in your field, feel free to reach out and discuss the opportunity with us.
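To give a rough idea of what a knowledge graph looks like under the hood, here is a toy sketch in plain Python. It stores facts as (subject, relation, object) triples and follows the links between them. All entity and relation names below are made up for illustration, and a real knowledge graph would typically live in a dedicated graph database rather than in a Python dictionary.

```python
from collections import defaultdict

# Facts stored as (subject, relation, object) triples.
# Every name here is a hypothetical example.
triples = [
    ("Sensor_7", "attached_to", "Machine_A"),
    ("Sensor_7", "measures", "temperature"),
    ("Machine_A", "located_in", "Plant_1"),
    ("Plant_1", "operated_by", "Acme"),
]

# Index the triples by subject so outgoing links can be followed quickly.
graph = defaultdict(list)
for subject, relation, obj in triples:
    graph[subject].append((relation, obj))


def reachable(start):
    """Return every entity that can be reached from `start` by following links."""
    seen = set()
    stack = [start]
    while stack:
        node = stack.pop()
        for _relation, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                stack.append(target)
    return seen
```

Here, `reachable("Sensor_7")` returns the machine, the plant, the operator and the measured quantity, without anyone having defined a table join in advance. That ability to follow relationships directly is a large part of the appeal of graph-based approaches.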